How is life in Debian testing? Well, it’s a life made of decisions. New versions of packages enter Debian every day, usually tens, maybe even hundreds of them. If I update, I may break something, so I’m a bit reluctant to update frequently. But if I don’t, tomorrow’s update will be a bit larger, and maybe a bit riskier. So every day I need to decide between taking the risk of upgrading and staying with my system as it is.

This is a decision familiar to anyone maintaining any software installation. Every time you deploy a system in production, you need to decide when to upgrade to a newer version, or how long to stick to the current one. And that decision is difficult, especially if the system “just works” (you know: “if it works, don’t touch it”). Upgrading to newer versions is always risky, especially if the system is composed of a large collection of components working together, as most modern deployments are. But not upgrading is also problematic. You are not benefiting from new functionality, performance improvements, and other goodies that new versions may bring. And you are also missing bug fixes and patches for security vulnerabilities, which could cause a lot of trouble in the future. So, when to upgrade?

This decision is usually made by rule of thumb: when “it seems” that the system needs to be upgraded. But anyone making these decisions, even with a lot of experience in them, would like to have some assistance. Something that allows a more informed decision to be made. Something that helps to balance reasons to upgrade against reasons to stay. And this is where Paul Sherwood and I came up with the concept of “technical lag”, which we presented for the first time in 2017, at the Open Source Leadership Summit (slides) and at the International Conference on Open Source Systems.

The idea of technical lag is simple: just as technical debt measures the cost of not doing things the way they should have been done in the first place, technical lag measures the cost of not upgrading to the ideal release of a system. Of course, the ideal may vary from situation to situation: it may be the latest available version, the most stable one, or the one with the fewest known unfixed bugs or vulnerabilities. So, the technical lag proposition is: “let’s measure how far away our deployment is from the ideal deployment we would like to have”. For that, we need to define our ideal, and a way of measuring the difference from that ideal: for example, we can compute it as the period between release dates, or as the number of bugs fixed between our release and the ideal. Depending on how we do that, we will have different kinds of technical lag, which will provide different information about the cost of not upgrading.
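To make the two example measures concrete, here is a minimal sketch in Python. The release history, version names, and function names are all made up for illustration; they do not come from any real package or from our tooling.

```python
from datetime import date

# Hypothetical release history of one component:
# version -> (release date, cumulative number of bugs fixed up to that release)
RELEASES = {
    "1.0": (date(2017, 1, 10), 0),
    "1.1": (date(2017, 3, 2), 12),
    "2.0": (date(2017, 6, 20), 30),
}


def time_lag_days(installed, ideal, releases=RELEASES):
    """Lag as the number of days between the two release dates (never negative)."""
    delta = releases[ideal][0] - releases[installed][0]
    return max(delta.days, 0)


def bug_lag(installed, ideal, releases=RELEASES):
    """Lag as the number of bugs fixed between the installed and the ideal release."""
    return max(releases[ideal][1] - releases[installed][1], 0)


# Here the "ideal" is assumed to be the latest release, 2.0.
print(time_lag_days("1.0", "2.0"))  # 161
print(bug_lag("1.1", "2.0"))        # 18
```

Choosing a different ideal (say, the most stable release instead of the latest) only changes the second argument; the way of measuring the distance stays the same.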

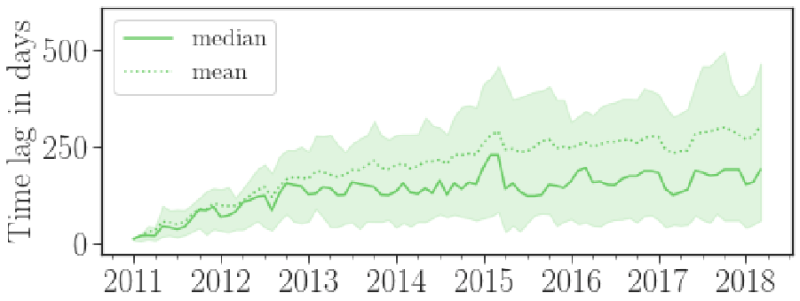

Evolution of technical lag over time (measured in days since publication) of npm packages. Chart created by Ahmmed Zerouali

When the deployment is composed of a collection of components, as is usually the case, we propose to compute technical lag as the aggregation of technical lag for all the components, since each of them could, maybe, be updated to newer versions, and therefore will show some lag. Depending on how we compute the aggregation (it can be just the maximum of all lags, or the addition of them all, or the mean, etc.) we have, again, different information about the cost of not upgrading. For example, we may want to have a deployment where no component is more than six weeks old, or where the aggregated number of known unfixed bugs is less than 30.
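As a rough sketch of how the choice of aggregation changes the answer, the following Python snippet aggregates per-component lags in three ways and checks a goal such as "no component more than six weeks old". The component names and lag values are invented, and `aggregate_lag` and `meets_goal` are hypothetical helpers, not part of any existing tool.

```python
from statistics import mean

# Hypothetical per-component time lag, in days, for one deployment
lags = {"libfoo": 12, "libbar": 50, "baz-tools": 0}

# The aggregation functions mentioned in the text
AGGREGATORS = {"max": max, "sum": sum, "mean": mean}


def aggregate_lag(component_lags, how="max"):
    """Aggregate the lag of all components with the chosen function."""
    values = list(component_lags.values())
    if not values:
        return 0  # an empty deployment has no lag
    return AGGREGATORS[how](values)


def meets_goal(component_lags, how, limit):
    """True if the aggregated lag does not exceed the limit."""
    return aggregate_lag(component_lags, how) <= limit


print(aggregate_lag(lags, "max"))   # 50
print(aggregate_lag(lags, "sum"))   # 62
print(meets_goal(lags, "max", 42))  # False: libbar is more than six weeks behind
```

The maximum answers "how bad is the worst component?", while the sum or the mean say more about the deployment as a whole, so the goal you set determines which one is useful.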

In most cases, collections of components include the concept of dependency. The idea of a dependency is that to install a certain component, we need to install certain versions of other components, which it needs to work. Dependencies have been well known since the early 1990s, when the first Linux-based distributions introduced them, and are nowadays present in most coordinated collections of software, like npm JavaScript packages or PyPI Python packages. Dependencies, and constraints on dependencies, mean that a system installed right now, with the latest possible versions, may already show some technical lag. That may happen because constraints force some of the components to stay at an old version, maybe because of API changes that other components didn’t follow.
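A toy illustration of that effect, in Python: if a dependent component only accepts versions below 2.0 of a library, a fresh install already lags behind the latest release. Versions are modeled as plain tuples and the constraint as a simple exclusive upper bound, which is a big simplification of what real package managers do; all names and versions are hypothetical.

```python
# Hypothetical available versions of a library, oldest to newest
AVAILABLE = [(1, 0), (1, 1), (2, 0), (2, 1)]


def newest_allowed(available, upper_bound):
    """Newest version strictly below upper_bound, or None if there is none."""
    allowed = [v for v in available if v < upper_bound]
    return max(allowed) if allowed else None


def version_lag(available, installed):
    """Lag measured as the number of releases newer than the installed one."""
    return sum(1 for v in available if v > installed)


# A dependent component declares "library < 2.0" because the API changed in 2.0.
installed = newest_allowed(AVAILABLE, (2, 0))
print(installed)                          # (1, 1)
print(version_lag(AVAILABLE, installed))  # 2
```

Even though the installer picked the newest version it was allowed to, the deployment starts two releases behind: that is the minimum lag imposed by the constraint.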

Last but not least…

Over the last year I’ve been exploring with other researchers how technical lag works in several scenarios: Debian packages, npm libraries, and Docker containers. We’re finding the concept to be useful in all of them, and easily extensible to many more. It is helping us to find out when deployments differ dramatically from the ideal, how specific ecosystems (such as npm or PyPI) impose some minimum lag due to how they handle dependencies, and whether a certain system is improving over time or not, once specific goals on technical lag are set.

For a recent presentation on the concept of technical lag, you can check out the slides of my latest talk on the matter. Now, I’m exploring how to make the concept fully operational for people maintaining deployments, with other researchers and developers. If you find the idea interesting, please let me know. I’m very interested in getting feedback about it.