The adoption of Python notebooks for data analysis has increased considerably, and they have become a de facto standard within data science communities. But which Python libraries are used in them?

Notebooks are interactive, human-readable documents that contain the analysis description and the results (e.g., figures, tables) as well as the Python code used to perform the data analysis. Thus, notebooks help scientists both keep track of the results of their work and share the code with others.

This post is inspired by this blog post about the most useful open source Python libraries for data analytics in 2017. In addition to describing and categorizing the libraries, its author gave insights into how popular they are, using metrics such as the number of commits and contributors, extracted mainly from their home repositories on GitHub. Here, we are going to analyze these libraries from different perspectives.

We believe it is worth studying how Python notebooks are developed and whether their development differs from common software development coding practices (e.g., naming conventions, code simplicity, testing).

In this first post, we focus on how notebooks are developed, in particular:

- which libraries they use

- which libraries are used together

- how they are imported

We build our analysis on a dataset composed of 2,702 IPython/Jupyter notebooks randomly sampled from GitHub.
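As a rough illustration of how the libraries used in a notebook can be identified, the sketch below reads the code cells of a notebook and collects the top-level names of the imported libraries. It is not the pipeline used for this post: it assumes the nbformat 4 JSON layout (a `cells` list with `cell_type` and `source` fields), the function names are hypothetical, and cells that cannot be parsed are simply skipped.

```python
import ast


def code_cells(notebook):
    """Return the source text of each code cell (nbformat 4 layout assumed)."""
    sources = []
    for cell in notebook.get("cells", []):
        if cell.get("cell_type") != "code":
            continue
        source = cell.get("source", "")
        # "source" may be stored as one string or as a list of lines.
        if isinstance(source, list):
            source = "".join(source)
        sources.append(source)
    return sources


def imported_libraries(source):
    """Return the top-level library names imported in one code cell."""
    # Drop IPython magics and shell escapes, which are not valid Python.
    lines = [
        line for line in source.splitlines()
        if not line.lstrip().startswith(("%", "!"))
    ]
    try:
        tree = ast.parse("\n".join(lines))
    except SyntaxError:
        return set()  # unparsable cell, ignored in this sketch
    libraries = set()
    for node in ast.walk(tree):
        if isinstance(node, ast.Import):
            libraries.update(alias.name.split(".")[0] for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            libraries.add(node.module.split(".")[0])
    return libraries


def notebook_libraries(notebook):
    """Return the set of libraries imported anywhere in a notebook."""
    libraries = set()
    for source in code_cells(notebook):
        libraries |= imported_libraries(source)
    return libraries


# Hypothetical notebook content, in the shape produced by json.load().
example_notebook = {
    "cells": [
        {"cell_type": "markdown", "source": ["# Analysis\n"]},
        {
            "cell_type": "code",
            "source": [
                "%matplotlib inline\n",
                "import numpy as np\n",
                "from matplotlib import pyplot as plt\n",
            ],
        },
        {
            "cell_type": "code",
            "source": "import os.path\nfrom sklearn.linear_model import LinearRegression",
        },
    ]
}

example_libraries = notebook_libraries(example_notebook)
```

For a notebook stored on disk, the dictionary would come from `json.load()` applied to the `.ipynb` file. Parsing with `ast` rather than matching text avoids counting imports that only appear in comments or strings.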

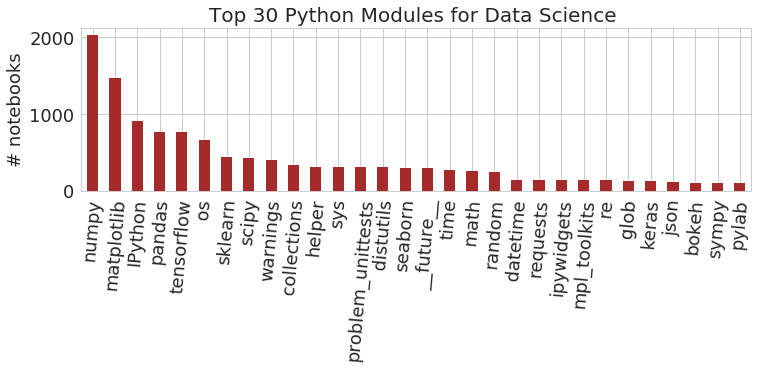

What are the most used libraries?

Figure 1 shows the top 30 Python libraries found in our dataset. As expected, well-known data science libraries such as NumPy, Matplotlib and Pandas are also the most used ones. TensorFlow also appears to be widely used in our dataset, which confirms its undisputed relevance in machine learning (as reported by the latest Stack Overflow survey). The presence of IPython in the top list is not surprising, since it is a support library for notebook functionality.

Standard libraries also rank among the top libraries in our dataset. For instance, os and sys are used to interact with the operating system, while datetime and time are used to manipulate dates and times.

Interestingly, problem_unittests also appears in the list. It is a script that embeds unittest methods tailored to test deep learning functions.

To give more insight into which data science libraries are used in notebooks, we analysed their categories (collected from this previous blog post).

The following table lists how often the most popular data science libraries are used in our dataset. As can be seen, some libraries are used much more than others within the same category. For instance, SciPy overtakes StatsModels, TensorFlow is the most widely used machine and deep learning library, and Matplotlib is preferred for visualizing data over Seaborn (used for statistical visualizations), Bokeh and Plotly (useful for interactive visualizations).

| Core libraries | Data mining & stats | Machine & deep learning | Visualization | NLP |
|---|---|---|---|---|
| NumPy (2024) | SciPy (429) | TensorFlow (760) | Matplotlib (1466) | Gensim (85) |
| Pandas (764) | StatsModels (88) | Scikit-Learn (435) | Seaborn (302) | NLTK (64) |
| SymPy (101) | Scrapy (2) | Keras (121) | Bokeh (101) | |
| | | Fastai (87) | Plotly (58) | |
| | | Theano (77) | | |

Which libraries are used together?

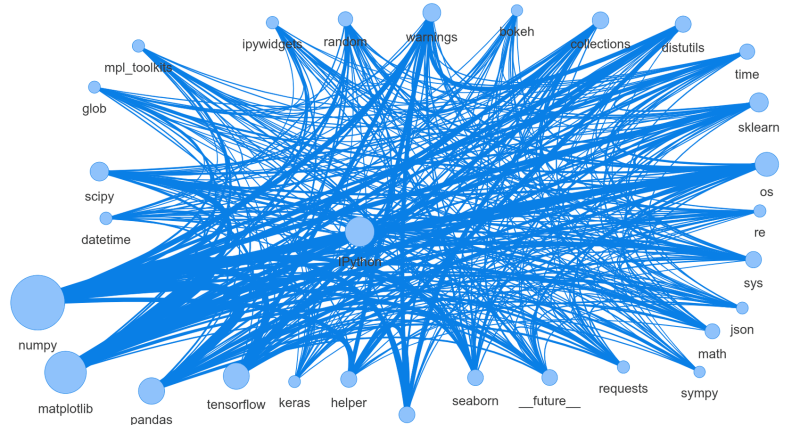

In order to identify how libraries are used together, we built a network graph using the vis.js library. A node represents a library, and its size depends on the number of times that library was used. An edge exists when two libraries have been used together within a notebook.

The size of an edge represents the number of times the corresponding pair of libraries was used together.
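The node and edge sizes described above boil down to two counters. The following is a minimal sketch, assuming each notebook has already been reduced to the set of libraries it imports and that a library is counted once per notebook; the function name and the sample data are hypothetical, and the rendering step with vis.js is left out.

```python
from collections import Counter
from itertools import combinations


def build_network(notebooks):
    """Count library usage (nodes) and pairwise co-usage (edges).

    `notebooks` is an iterable of collections of library names,
    one collection per notebook.
    """
    node_weights = Counter()
    edge_weights = Counter()
    for libraries in notebooks:
        # Sort so that each pair is always stored in the same order.
        unique = sorted(set(libraries))
        node_weights.update(unique)
        edge_weights.update(combinations(unique, 2))
    return node_weights, edge_weights


# Hypothetical input: three notebooks and the libraries they import.
sample_notebooks = [
    {"numpy", "pandas", "matplotlib"},
    {"numpy", "matplotlib"},
    {"numpy", "tensorflow"},
]

node_weights, edge_weights = build_network(sample_notebooks)
```

Here `node_weights["numpy"]` gives the size of the NumPy node and `edge_weights[("matplotlib", "numpy")]` the size of the edge between Matplotlib and NumPy. Both counters can then be serialized to the node and edge lists that a graph library expects.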

Figure 2 shows the network for the top 30 libraries in our dataset. As can be seen, the network is highly connected, meaning that most libraries are used together.

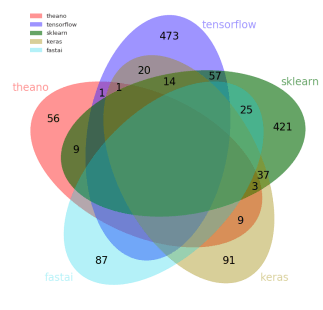

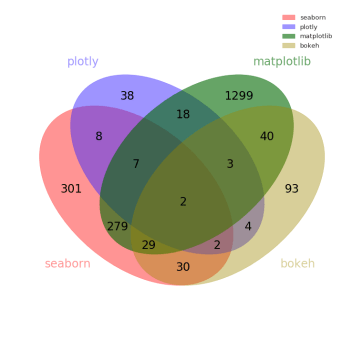

To explore our dataset further, we looked at whether libraries of the same category are used together. For each category, we built a Venn diagram to highlight the libraries used simultaneously in notebooks. Figure 3 shows the results obtained for the deep/machine learning (on the left) and visualization (on the right) categories.

As can be seen, deep/machine learning libraries are mostly used individually, while this does not seem to be the case for visualization libraries.
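The regions of such a Venn diagram can be computed with plain set operations. The sketch below handles the two-library case, assuming we know which notebooks use each library; the function name and the notebook identifiers are made up for illustration and are not taken from our dataset.

```python
def venn_regions(users_a, users_b):
    """Return the sizes of the three regions of a two-set Venn diagram."""
    a, b = set(users_a), set(users_b)
    return {
        "only_a": len(a - b),  # notebooks using only the first library
        "both": len(a & b),    # notebooks using the two libraries together
        "only_b": len(b - a),  # notebooks using only the second library
    }


# Hypothetical notebook identifiers for two visualization libraries.
matplotlib_users = {"nb1", "nb2", "nb3", "nb4"}
seaborn_users = {"nb3", "nb4", "nb5"}

regions = venn_regions(matplotlib_users, seaborn_users)
```

A small "both" region relative to the other two indicates libraries that are mostly used individually, which is the pattern reported for the deep/machine learning category.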

How are libraries imported?

In Python, there are different ways to import a library. They can be summarized as either importing the whole library (e.g., import numpy) or importing specific names from it, such as submodules, functions or classes (e.g., from numpy import array). By analyzing how libraries are imported, it is possible to derive some common practices.

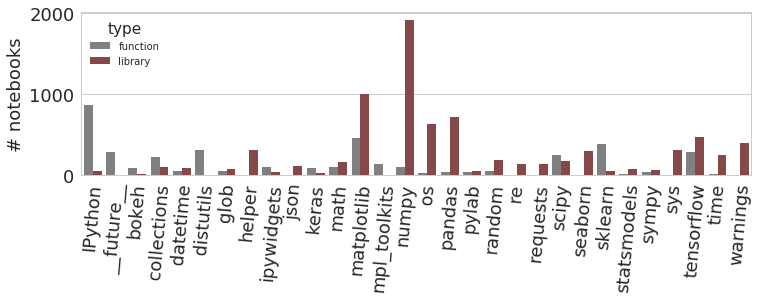

Figure 4 shows the number of notebooks in which libraries were fully (red) or partially (gray) imported. As can be seen, core libraries such as NumPy and Pandas are generally fully imported, while for other libraries, such as SciPy and Scikit-Learn, only specific parts are imported. This difference can be explained by the fact that these practices are learned from the documentation of these libraries (e.g., Seaborn, SciPy).

We also checked how many times a given library is imported in the same notebook. As expected, we found that importing specific parts of the same library several times is very common. However, we also found that in some notebooks, some libraries were fully imported many times. A manual inspection revealed that this happened in notebooks used as courses and tutorials, where each cell represented an independent program.
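The full versus partial distinction, and the number of times a library is imported, can both be read from the syntax tree of the code. This is a minimal sketch rather than our exact procedure: it assumes that every `import x` statement counts as a full import and every `from x import ...` statement as a partial one (so `import scipy.stats` is counted as a full import of scipy), and the function name is hypothetical.

```python
import ast
from collections import Counter


def import_styles(source):
    """Count full and partial imports per top-level library."""
    counts = Counter()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            # "import numpy" or "import numpy as np"
            for alias in node.names:
                counts[(alias.name.split(".")[0], "full")] += 1
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            # "from numpy import array"; relative imports are ignored
            counts[(node.module.split(".")[0], "partial")] += 1
    return counts


# Hypothetical notebook code, with all cells concatenated.
sample_source = """
import numpy as np
import numpy
from scipy import stats
from scipy.optimize import minimize
import pandas as pd
"""

style_counts = import_styles(sample_source)
```

In this sample, `style_counts[("numpy", "full")]` is 2, which is the kind of repeated full import observed in tutorial notebooks, and `style_counts[("scipy", "partial")]` is 2.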

Conclusion

In this first post, we carried out an exploratory analysis of the use of libraries in Python notebooks, based on a small dataset obtained from GitHub. The most used core libraries are NumPy and Pandas, while SciPy, TensorFlow and Matplotlib are the most used libraries for data mining, machine learning and visualization, respectively. Most notebooks tend to use many libraries together. However, deep/machine learning libraries are generally not used in combination with others of the same category, while this does not seem to be the case for visualization libraries.

Core libraries are generally fully imported in notebooks, while for other libraries only specific parts are imported. These import practices appear to be learned mostly from official documentation.